The MedMal Room: Ten Preventable Cases on L&D with GAI

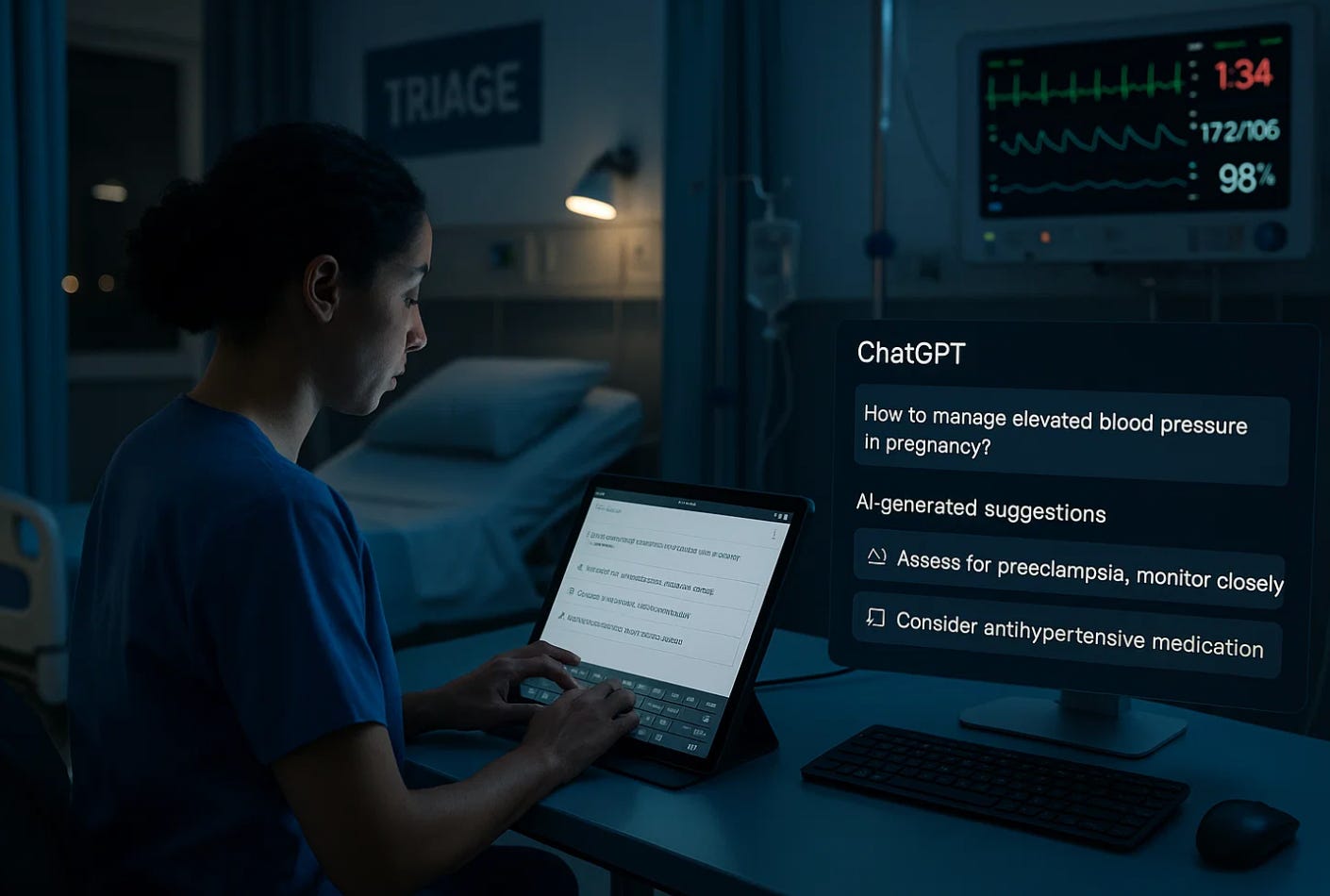

The Safety Ledger — When ChatGPT-level reasoning could have prevented harm.

A resident once said during a morbidity and mortality conference, “We didn’t think of it.”

That sentence, more than any lab value or monitor trace, explains why preventable harm persists. On labor and delivery units, the pattern is rarely ignorance; it’s cognitive overload. Atypical symptoms are dismissed as “just pregnancy,” abnormal findings are attributed to “artifact…