Smarter Patients, Safer Care: How Generative A.I. Can Empower Every Conversation

When patients use A.I. wisely, information becomes a bridge, not a barrier, between doctor and patient.

The Responsibility Clause: ethical reasoning at the crossroads of autonomy and professionalism.

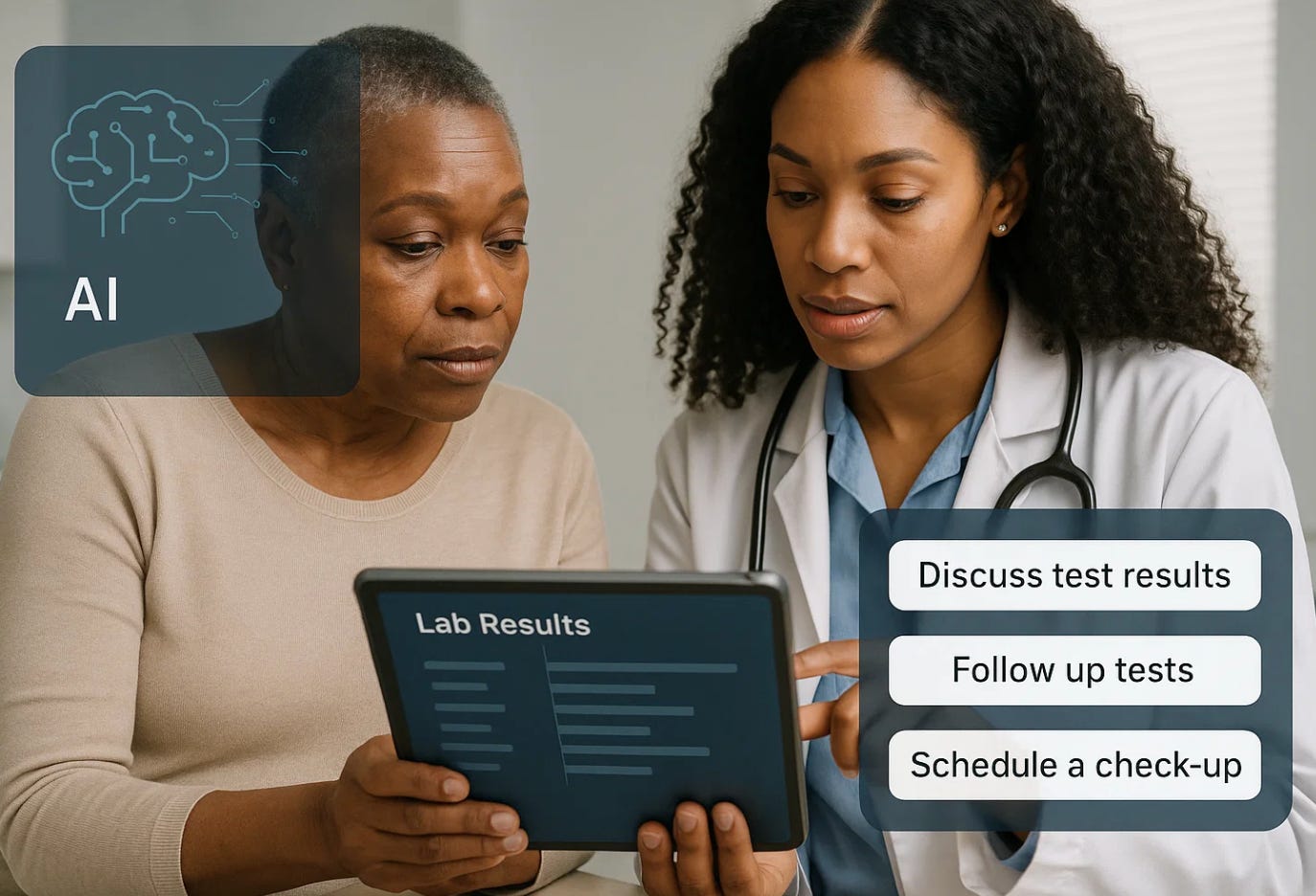

Every day, patients type questions about their health into search engines. The results are often confusing, sometimes terrifying, and rarely personalized. Generative artificial intelligence (A.I.) tools such as Claude or ChatGPT can change that equation. Used properly, they can help patients understand test results, prepare better questions, and follow through with care. But they can also mislead when used without structure or oversight. In obstetrics and gynecology, where clarity and timing often determine outcomes, knowing how to use A.I. safely is becoming a new kind of health literacy.

Generative A.I. tools can summarize medical reports, explain terms in plain language, or prepare questions for an upcoming prenatal or gynecologic visit. Unlike a web search, which returns thousands of unfiltered links, generative systems synthesize and contextualize information. The key, however, lies in how patients ask. The same technology that translates medical jargon into everyday language can also produce false confidence when prompted vaguely. “Tell me what’s wrong with me” is a dangerous prompt. “Explain what this Pap test result means and when I should call my doctor” is a responsible one.

Physician Responsibility: Guiding the Safe Use of Generative A.I.

It is not enough for patients to use technology wisely; physicians have a professional duty to guide them in doing so. In the same way that we counsel patients about medication safety, diet, or prenatal exercise, clinicians now share an ethical obligation to teach patients how to use generative A.I. responsibly. That means recommending trusted platforms, explaining their limits, and helping patients recognize misinformation and bias. Teaching digital literacy is part of informed consent in the twenty-first century.

A doctor who simply warns that “A.I. can be wrong” misses an opportunity. A better approach is to show patients how to ask clear questions, confirm answers against evidence-based sources, and bring A.I. summaries to appointments for discussion. By doing so, clinicians strengthen shared decision-making and reduce the harm of unsupervised information seeking. The duty of beneficence extends beyond diagnosis to ensuring that patients can navigate a digital world without confusion or fear. In this new landscape, professionalism includes helping patients use A.I. safely, critically, and compassionately, because empowering them to understand is itself a form of care.

In obstetrics and gynecology, where prevention and early intervention save lives, the right use of A.I. can change outcomes. A patient who receives an abnormal HPV test result can upload her report to a secure, private A.I. system that summarizes it, cites guidelines from the American College of Obstetricians and Gynecologists (ACOG) or the American Society for Colposcopy and Cervical Pathology (ASCCP), and drafts a list of questions for her clinician. A woman with gestational diabetes can use A.I. to interpret her blood sugar logs and understand when a pattern might need attention. Expectant parents can track fetal movement patterns or review the evidence on preeclampsia warning signs with clarity, not panic. In each scenario, the goal is empowerment, not replacement.

Ethically, this represents a shift toward shared responsibility. When patients understand what their data mean, conversations become partnerships rather than one-sided explanations. Clinicians benefit, too: a patient who arrives informed is more likely to follow recommendations and less likely to mistake uncertainty for neglect. But the risks remain real. A.I. can fabricate sources, oversimplify risk, or sound authoritative when it is wrong. That is why the guiding principle should be: use A.I. to learn, not to decide.

Clinicians should encourage structured use. Hospitals could offer “trusted A.I. companions” integrated with official patient portals, designed to explain results in evidence-based language and flag dangerous misinformation. The same tools could guide patients to guideline-based resources, track testing intervals, and even generate reminders—bridging the gap between data and care.

In the new age of medicine, empowering patients with A.I. is not optional; it is an ethical obligation. A well-informed patient can catch what a system might miss; a confused one can fall through the cracks. The physician’s role is not to guard information but to help patients navigate it safely.

Finally, always ask A.I. to give you the information in “plain language,” meaning wording that the average person can understand.

20 Best Prompts for Patients Using Generative A.I. Responsibly

For understanding test results

1. “Explain this Pap smear report in plain language and tell me what follow-up ACOG recommends.”

2. “Summarize my HPV result and tell me whether it needs repeat testing or colposcopy.”

3. “Interpret this prenatal ultrasound report and list any findings I should discuss with my doctor.”

4. “What does this gestational diabetes log mean, and when should I call my provider?”

5. “Explain my blood pressure readings and what counts as high during pregnancy.”

For preparing appointments

6. “Help me make a list of questions to ask my obstetrician at my next prenatal visit.”

7. “What should I ask before scheduling an induction or cesarean section?”

8. “Summarize the pros and cons of each birth control option based on medical evidence.”

9. “Explain my lab results (file attached) and what lifestyle steps I can discuss with my doctor.”

10. “Write a short message I can send to my provider to clarify my test result.”

For safety and symptom tracking

11. “List early warning signs of preeclampsia and when to check blood pressure at home.”

12. “Create a table to track fetal movements daily and flag when to call the hospital.”

13. “Explain what vaginal bleeding in early pregnancy might mean and when it’s an emergency.”

14. “Describe normal versus concerning symptoms after delivery.”

15. “Explain how to recognize postpartum depression and how to seek help.”

For empowerment and follow-up

16. “Summarize ACOG’s cervical cancer screening schedule for my age.”

17. “Explain what a colposcopy is and what to expect during and after.”

18. “Create a simple plan to track my Pap and HPV tests so I never miss a follow-up.”

19. “List questions to ask if my doctor says I have fibroids or an ovarian cyst.”

20. “Explain how to read my patient portal safely and what results I should always review with a clinician.”

Closing Reflection

Generative A.I. can democratize understanding without undermining expertise. The difference lies in intent. When it is used to interpret, not diagnose; to prepare, not replace; to clarify, not command, A.I. becomes the patient’s ally and the clinician’s partner. The future of obstetrics may well depend not on how smart the machines become, but on how wisely patients and doctors use them together.